GIUSEPPE BOCCIGNONE1, DONATELLO CONTE2, VITTORIO CUCULO1, ALESSANDRO D’AMELIO1, GIULIANO GROSSI1, and RAFFAELLA LANZAROTTI1

1Dipartimento di Informatica - Università degli Studi di Milano, Via Celoria 18, I-20133 Milano, Italy (e-mail: giuseppe.boccignone@unimi.it, vittorio.cuculo@unimi.it, alessandro.damelio@unimi.it, giuliano.grossi@unimi.it, raffaella.lanzarotti@unimi.it)

2Université de Tours, Laboratoire d’Informatique Fondamentale et Appliquée de Tours (LIFAT - EA 6300), 64 Avenue Jean Portalis, 37000 Tours, France (e-mail: donatello.conte@univ-tours.fr)

Corresponding author: Giuliano Grossi (e-mail: giuliano.grossi@unimi.it).

This work has been supported by Fondazione Cariplo, through the project “Stairway to elders: bridging space, time and emotions in their social environment for wellbeing”, grant no. 2018-0858.

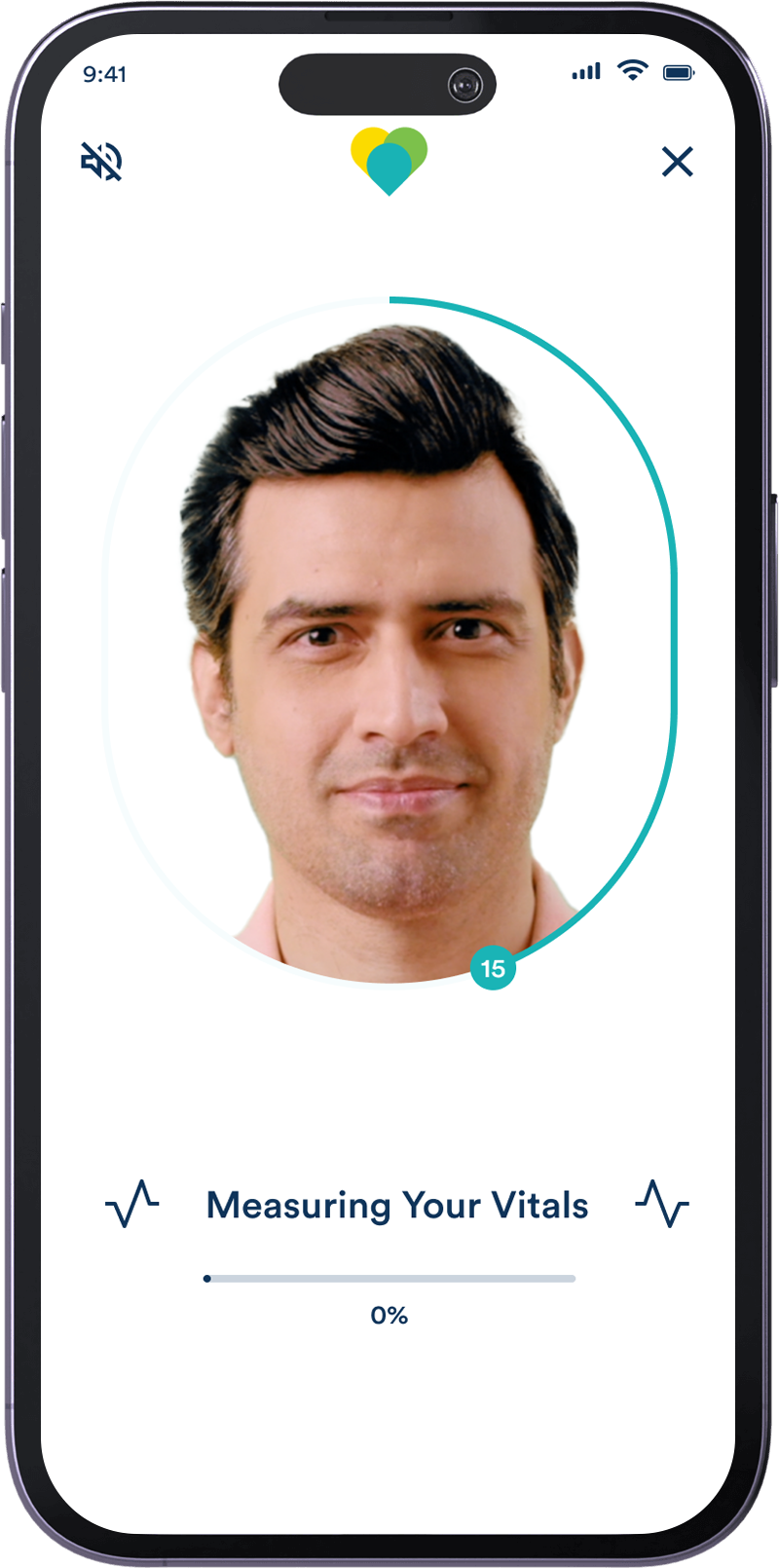

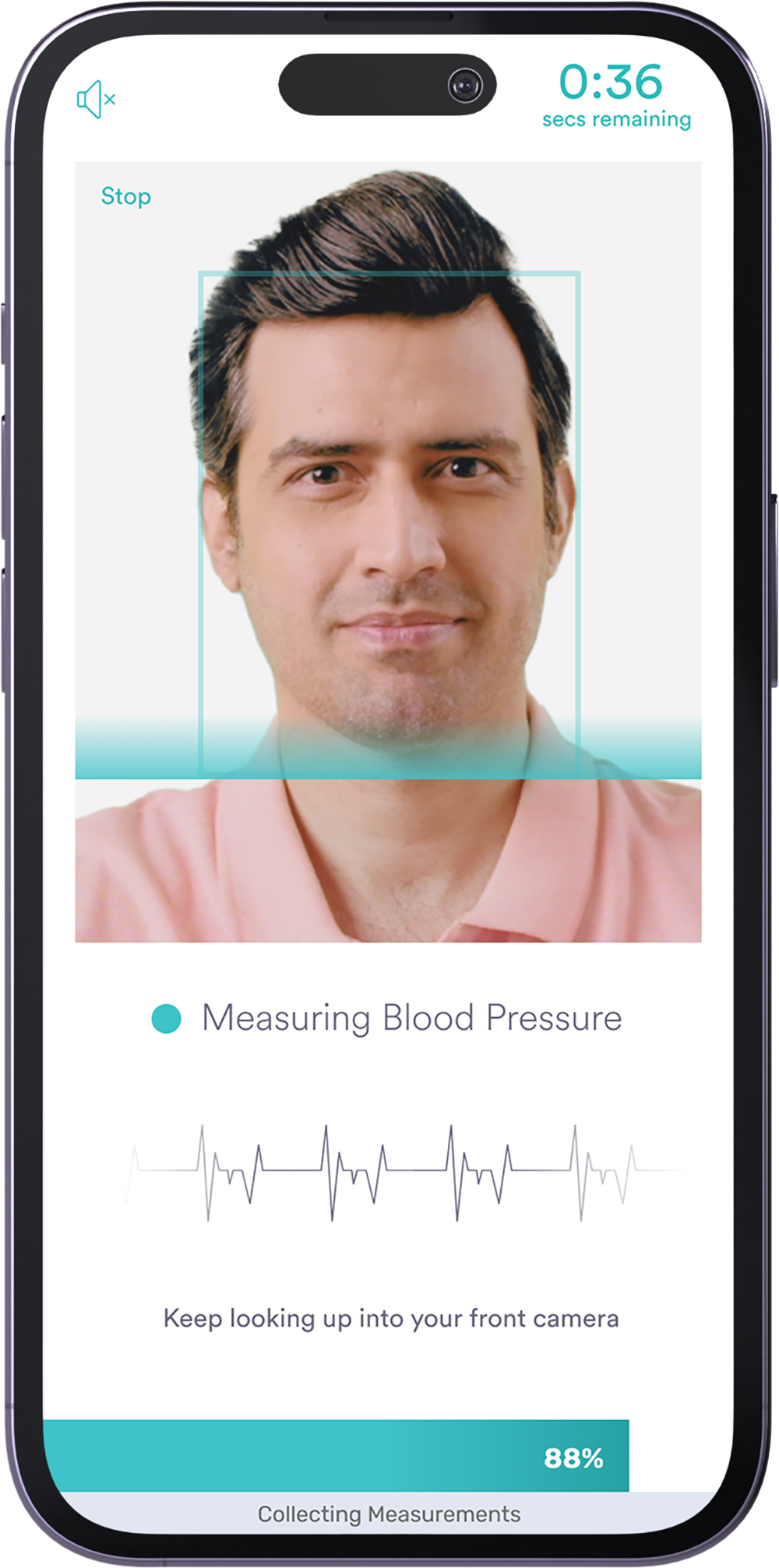

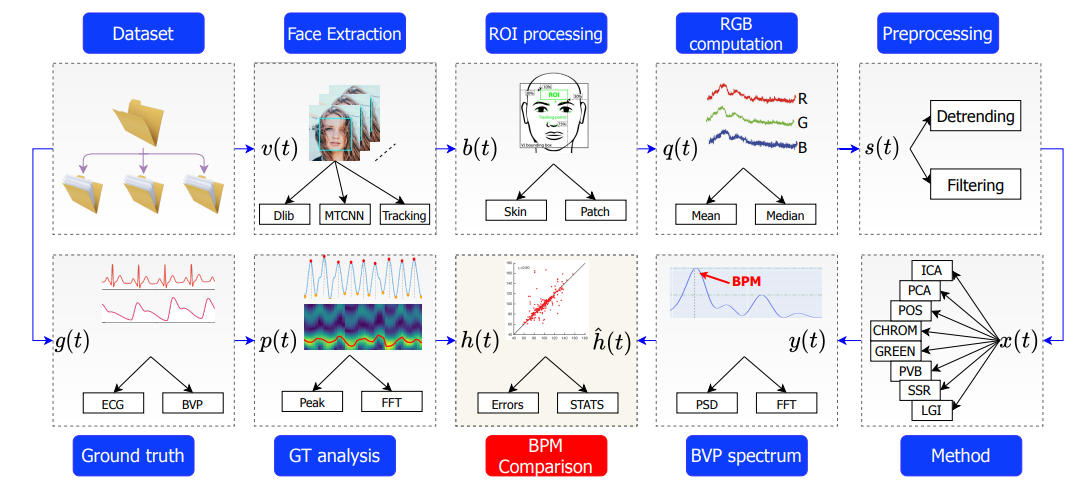

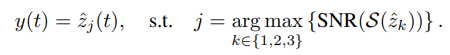

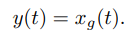

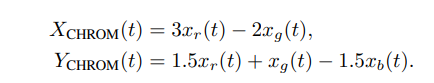

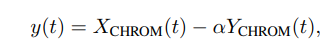

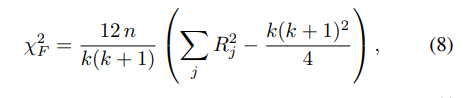

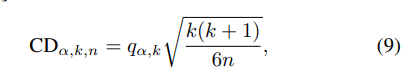

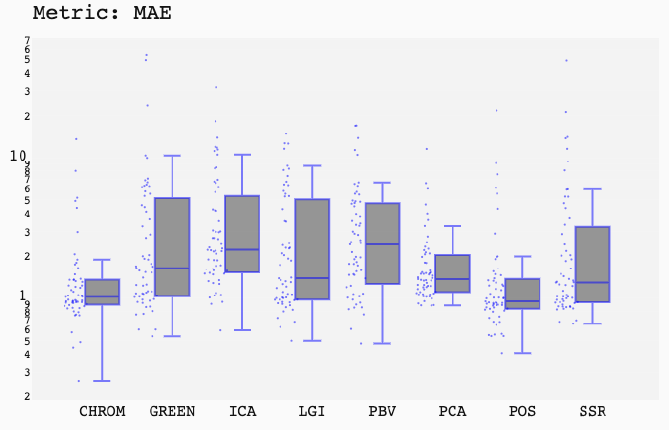

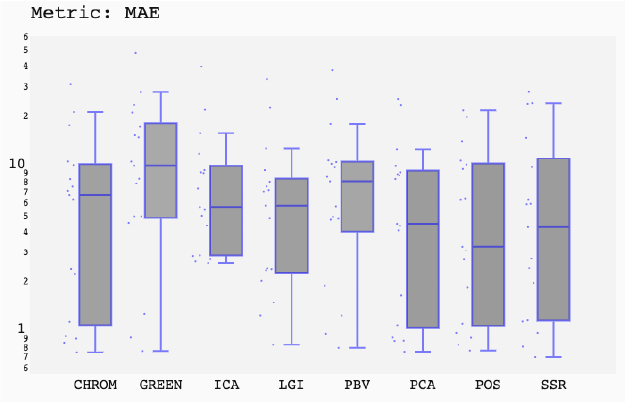

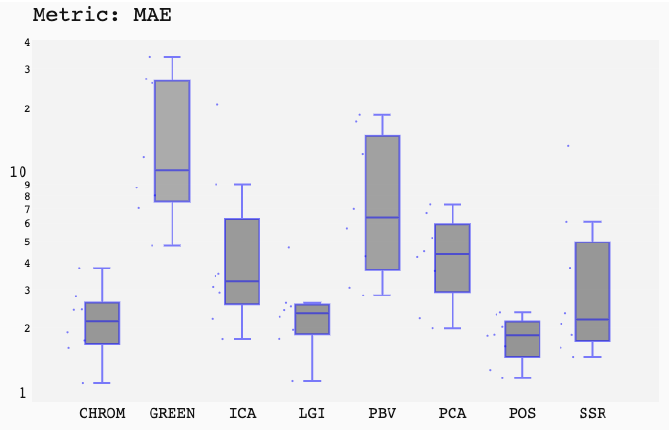

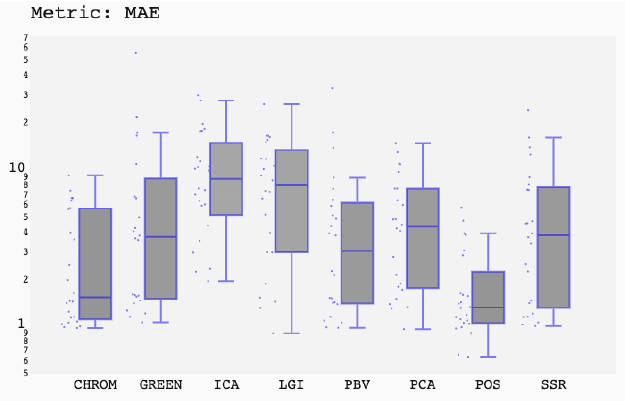

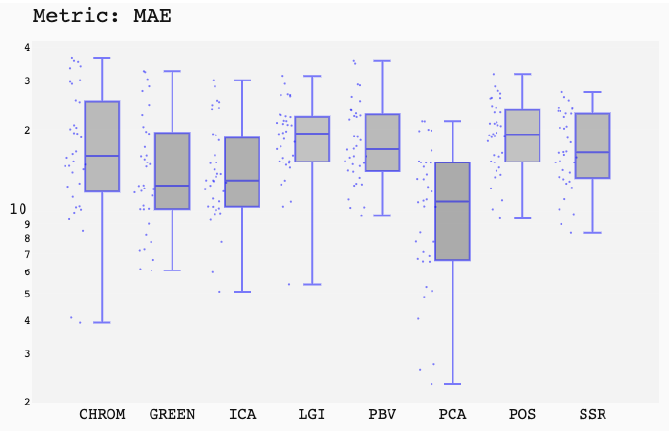

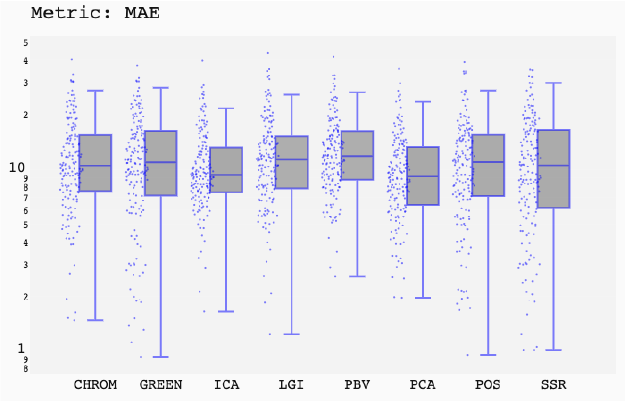

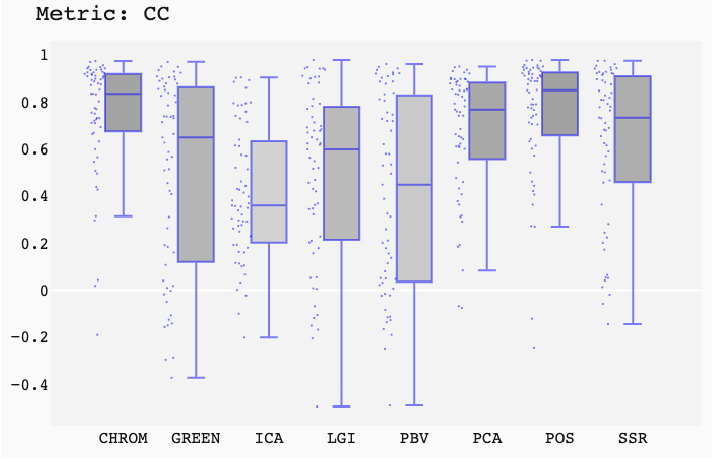

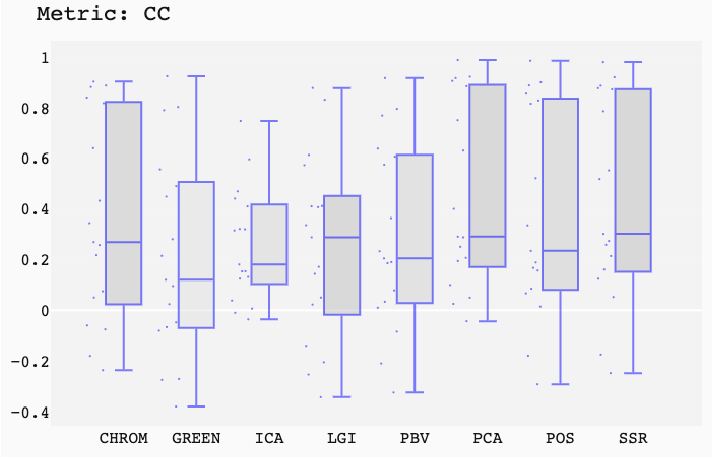

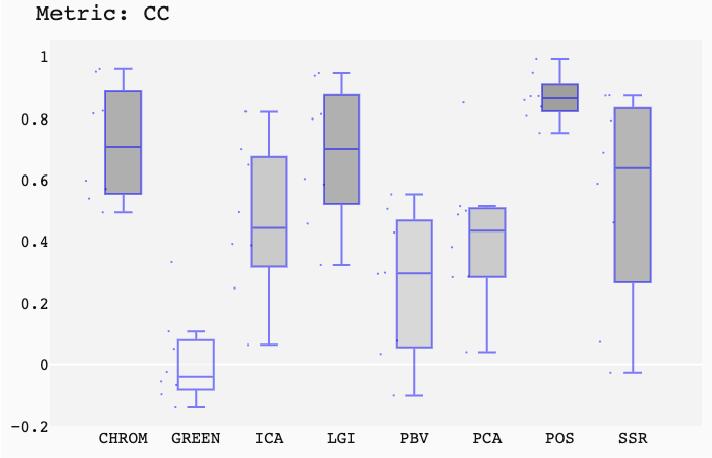

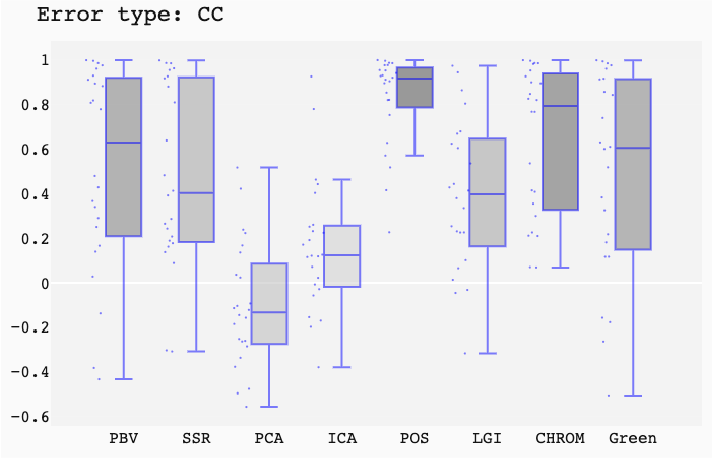

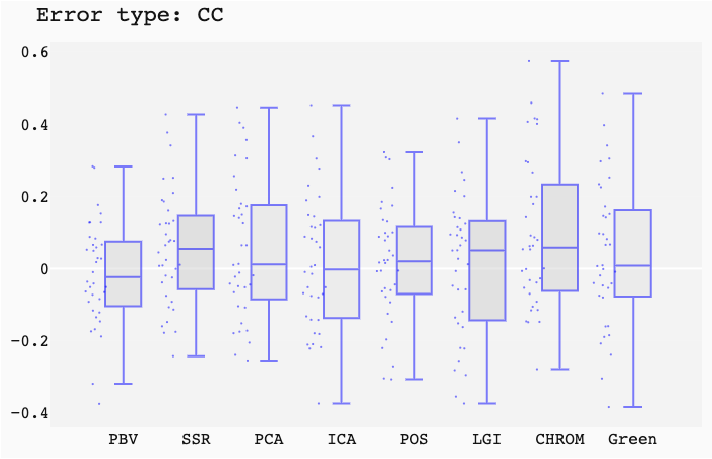

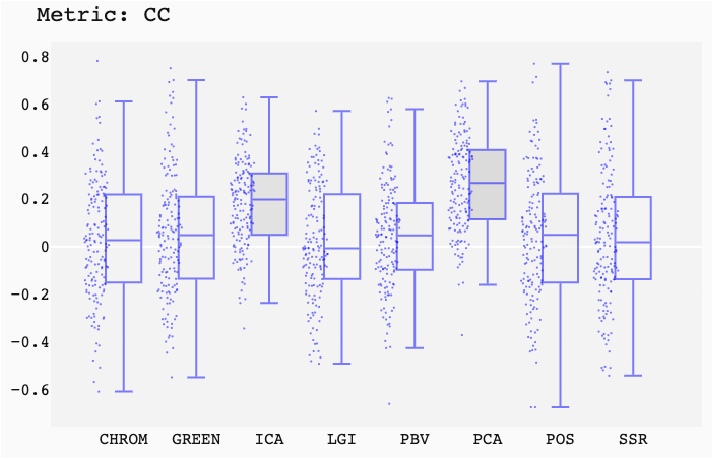

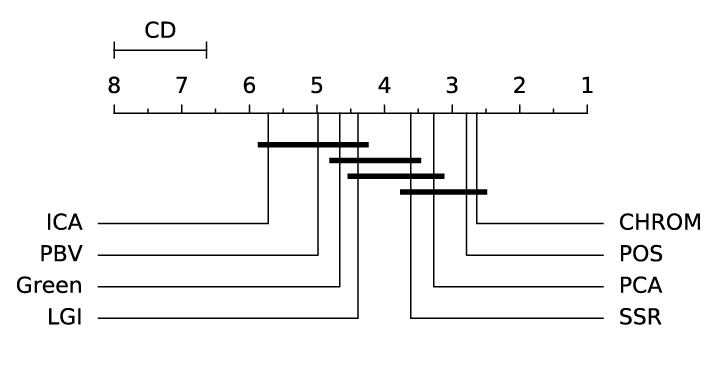

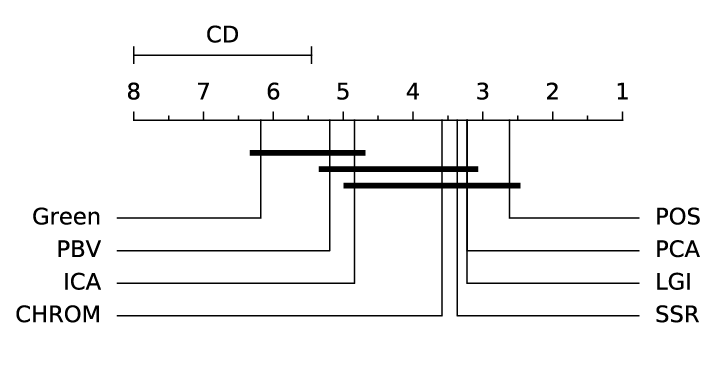

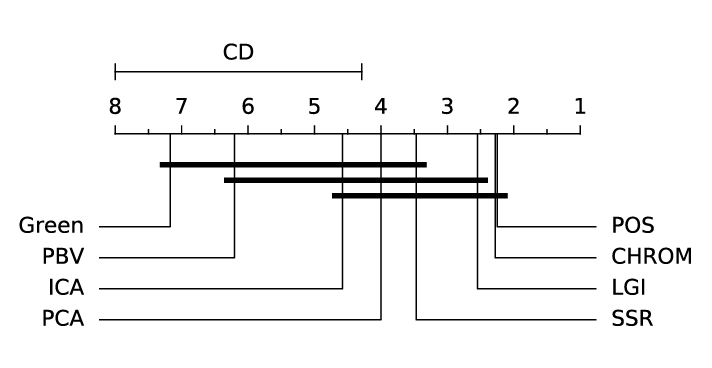

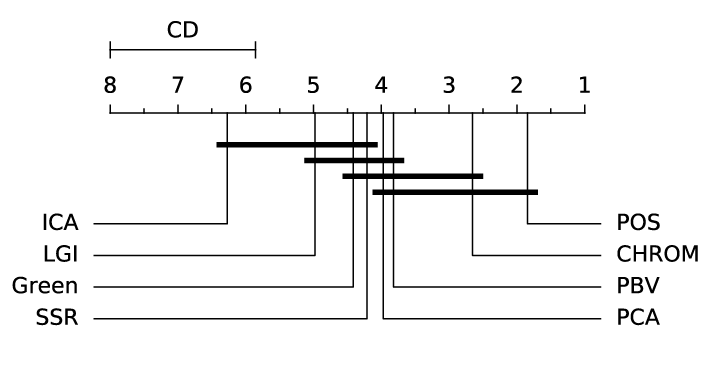

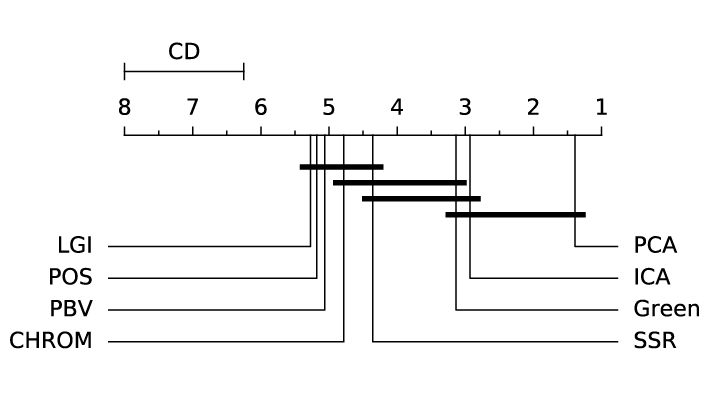

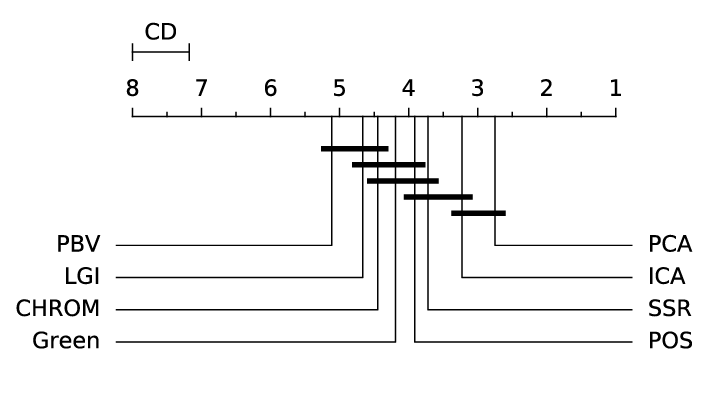

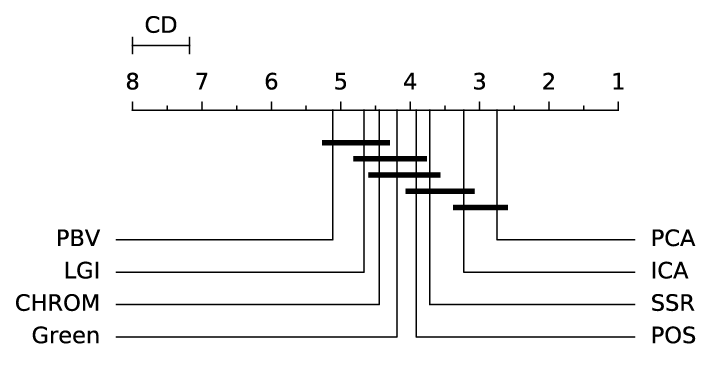

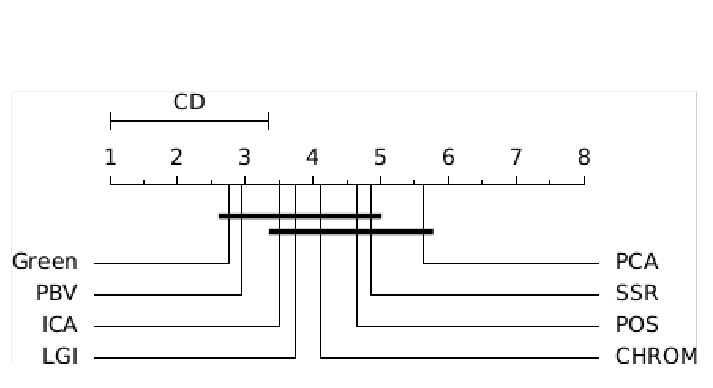

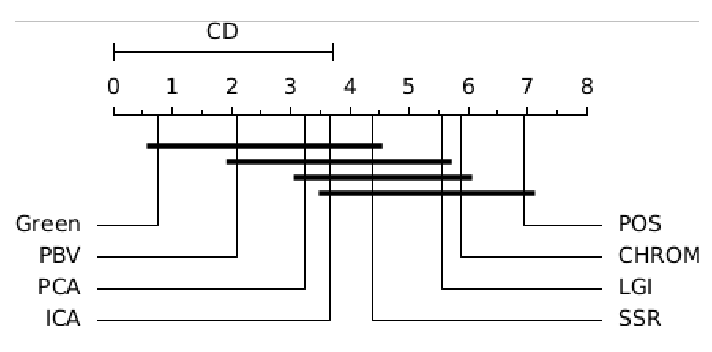

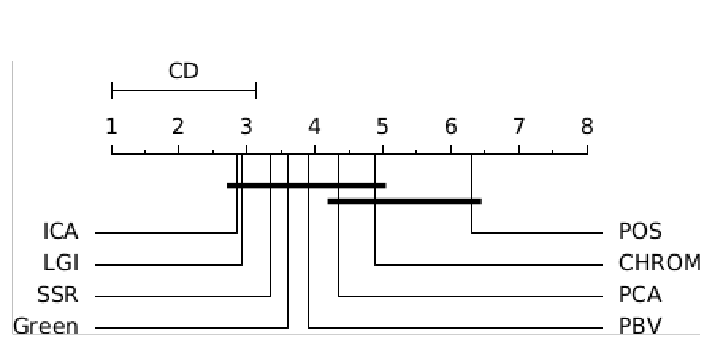

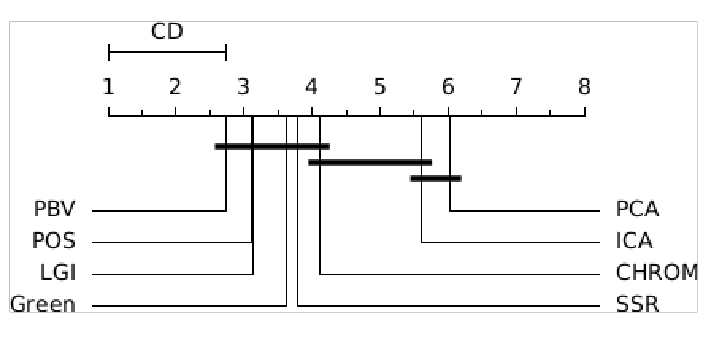

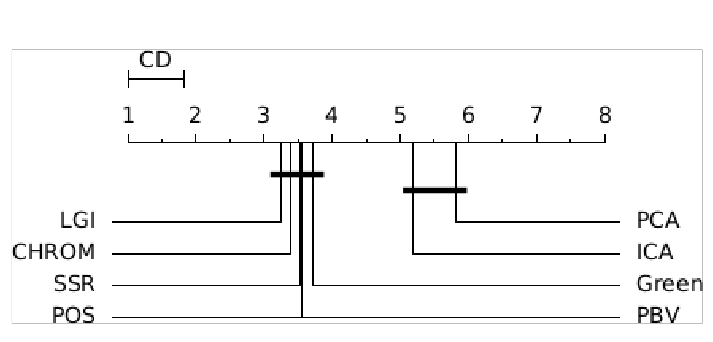

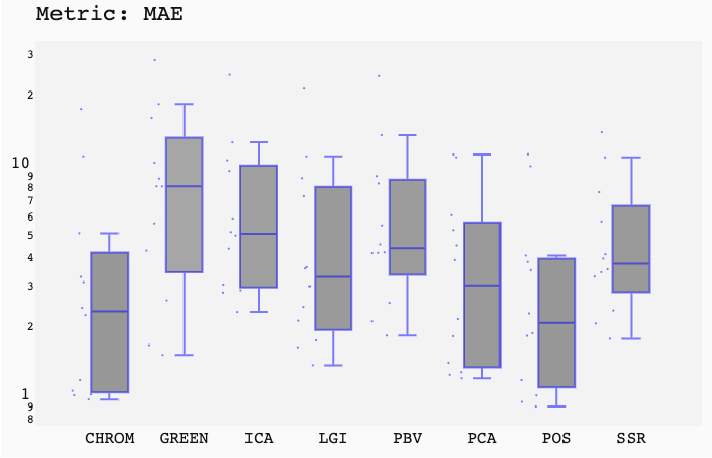

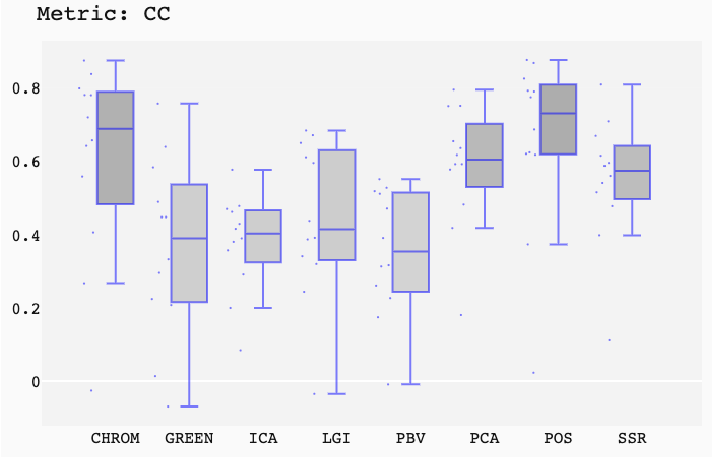

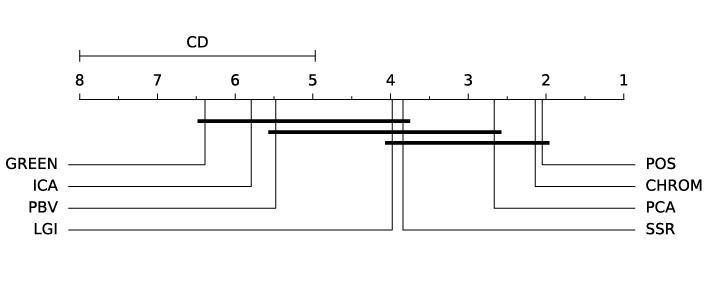

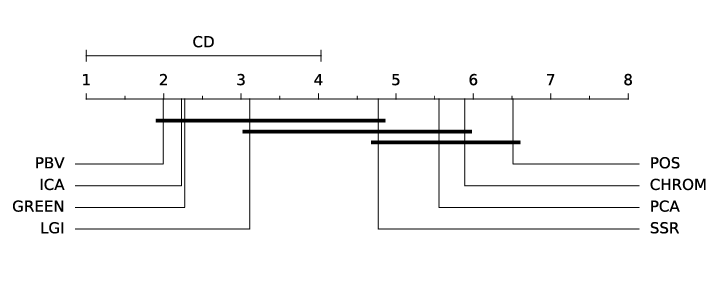

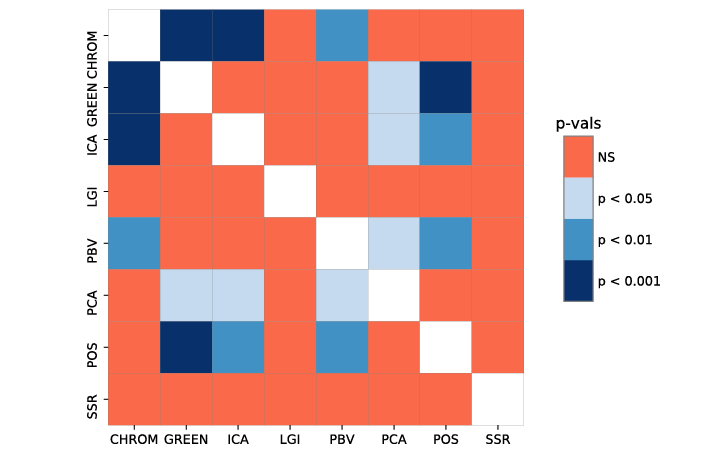

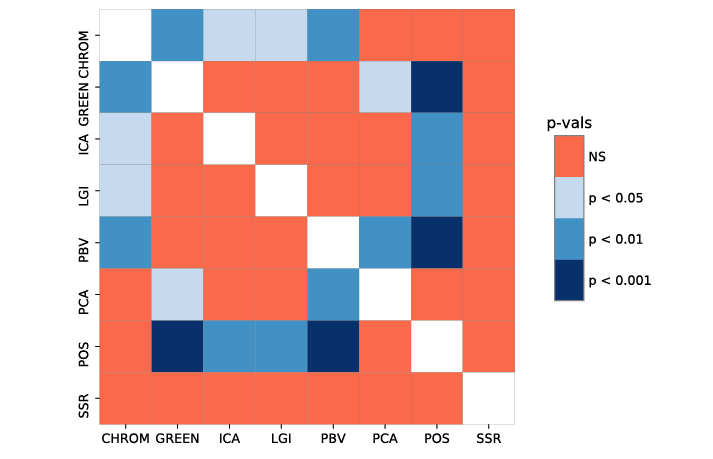

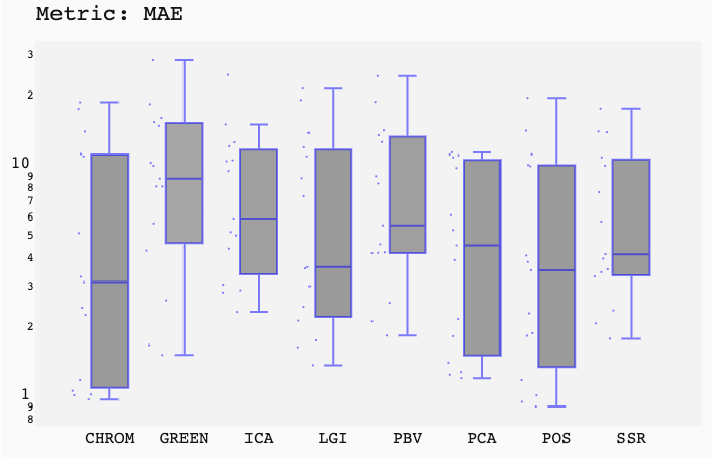

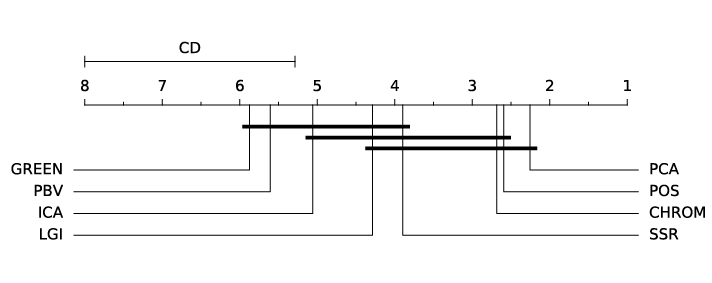

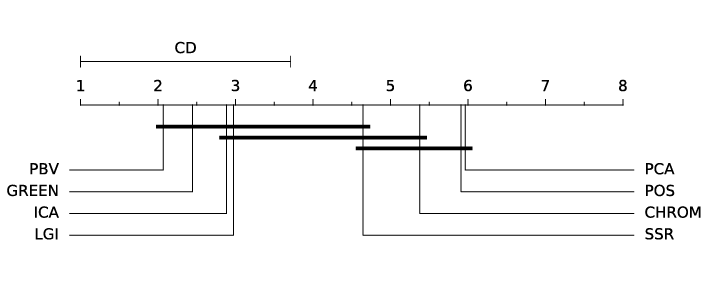

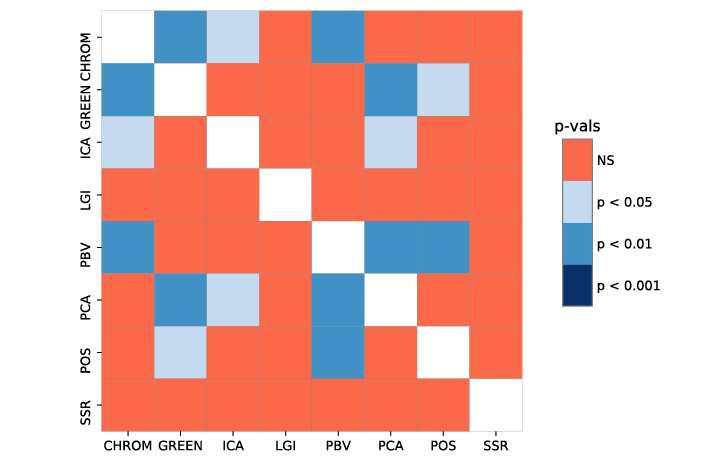

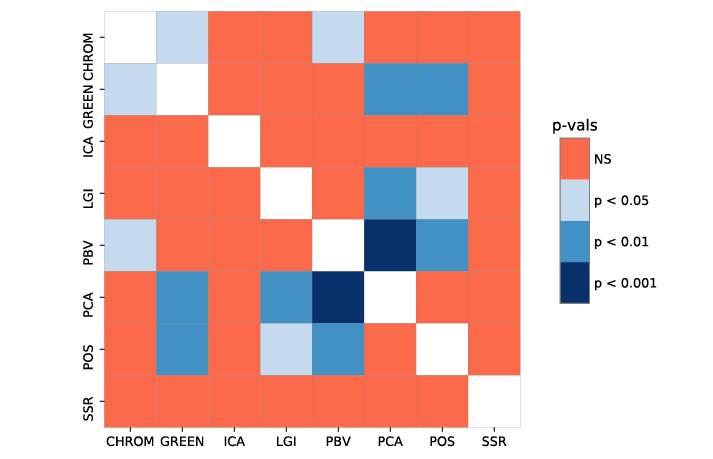

ABSTRACT This paper presents a comprehensive framework for studying methods of pulse rate estimation relying on remote photoplethysmography (rPPG). There has been a remarkable development of rPPG techniques in recent years, along with the publication of several surveys, yet a sound assessment of their performance has been at best overlooked, if not left undeveloped. The methodological rationale behind the framework we propose is that, in order to study, develop and compare new rPPG methods in a principled and reproducible way, the following conditions should be met: i) a structured pipeline to monitor rPPG algorithms’ input, output, and main control parameters; ii) the availability and the use of multiple datasets; iii) a sound statistical assessment of methods’ performance. The proposed framework is instantiated in the form of a Python package named pyVHR (short for Python tool for Virtual Heart Rate), which is made freely available on GitHub (github.com/phuselab/pyVHR). Here, to substantiate our approach, we evaluate eight well-known rPPG methods through extensive experiments across five public video datasets, followed by a nonparametric statistical analysis. Surprisingly, the performances achieved by the four best methods, namely POS, CHROM, PCA and SSR, are not significantly different from a statistical standpoint, highlighting the importance of evaluating the different approaches with a statistical assessment.

INDEX TERMS Remote photoplethysmography (rPPG), Python package, statistical analysis, nonparametric statistical test, pulse rate estimation